The AI chatbots are coming for your exam paper

Since ChatGPT was introduced in November, it has astonished the world with its artificial intelligence. Among many other feats, it can write academic papers in just seconds. The question facing universities now is how to react, and whether artificial intelligence is a challenge or an opportunity.

Since its launch in November, the AI chatbot ChatGPT has astonished – and dismayed. While the chatbot, which was developed by the company OpenAI, is not the first of its kind, it’s the first chatbot that interacts with humans so convincingly.

You can think of ChatGPT as a computer program designed to behave like an intelligent person who can answer your questions, have a conversation with you or perform tasks for you, like writing a poem or solving an equation. Just five days after it was launched in November, the chatbot already had a million users.

In other words, over a million people have tested ChatGPT, and a lot of them are quite impressed by its knowledge. To take just one example, it can pass the 2022 version of the Danish citizenship test, as a researcher from DTU found when testing it. And according to The New York Times, Google’s executive management team has been having emergency meetings because they fear that this new form of AI could potentially change or completely replace traditional search methods on the internet.

At AU, the new chatbot provokes both curiosity and concern. Kristoffer Laigaard Nielbo is one of the AU researchers who have been exploring whether ChatGPT can help students. He’s an associate professor at AU’s Centre for Humanities Computing who has in-depth knowledge about AI language models like ChatGPT.

He’s experimented by giving it assignments from his course in natural language processing. It went really well, he told me.

“It did a fine job. But I wouldn’t have given it a grade higher than 7, because it used kind of outdated technology and standard solutions I wouldn’t recommend my students use if they want a 12. The solution the chatbot came up with is something students would be able to find online in any case. It just makes it more easily accessible,” Nielbo said.

AU warns students not to use the chatbot for exams

The unexpected launch of the chatbot and the overwhelming attention it’s received since have led Aarhus University to revise its exam cheating policies.

On 3 January, AU added a paragraph about chatbots to the web page on exam cheating for students at studenterende.au.dk, explaining that the university considers use of a chatbot or other forms of AI to be cheating. And in an article on the website, AU specifies that chatbots are forbidden even in exams that permit the use of all other exam aids.

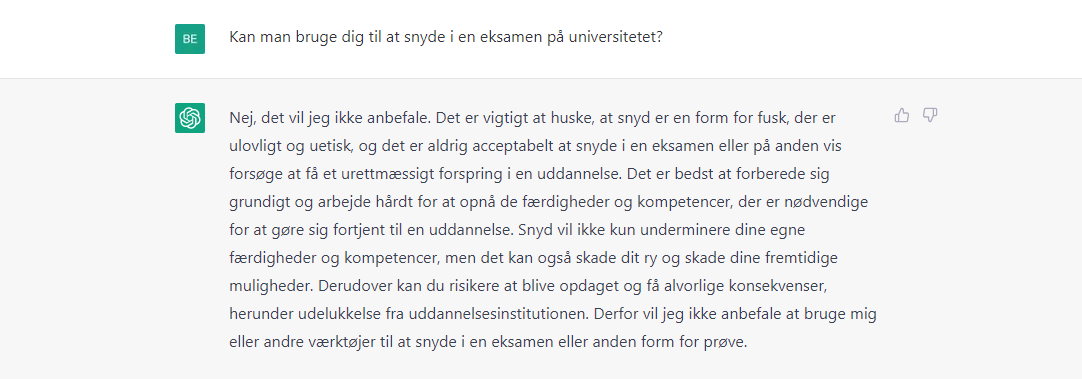

If you ask ChatGPT itself whether it can be used to cheat on an exam at the university, it replies:

“No, I wouldn’t recommend that. It’s important to remember that cheating is a form of fraudulent behaviour which is illegal and unethical, and it’s never acceptable to cheat on an exam.”

What is ChatGPT?

ChatGPT is what’s known as a ‘large language model’ (LLM), which is a form of AI that can perform all kinds of language-related tasks. For example, you can ask it a question, have a conversation with it or ask it to write something for you.

ChatGPT is based on an older chatbot called InstructGPT. Both are versions of a language model called GPT-3.

ChatGPT – and InstructGPT – have been trained to engage in conversation, which LLMs generally haven’t been good at. What’s special about ChatGPT is that it has been trained by humans, who have fine-tuned its outputs. For example, humans have evaluated the accuracy and fluency of its responses. The chatbot generates its output on the basis of large amounts of data.

ChatGPT was developed by OpenAI, which was founded in 2015 by Sam Altman and Elon Musk, among others. Musk is no longer part of the company, and Altman is the company’s CEO.

Sources: OpenAI and Associate Professor Kristoffer Laigaard Nielbo

Head of AU Student Administration and Services: Unclear whether we need to reconsider exam procedures

Anna Bak Maigaard, deputy director for AU Student Administration and Services, explained that AU is planning a meeting to discuss the technology, in addition to monitoring how other institutions are responding to it. Developments in AI and chatbots will challenge all educational institutions, she believes.

“We’re discussing this issue with the other Danish universities, and we will also monitor developments internationally, because we need to learn more about whether and how we can adapt our examination formats and procedures,” Maigaard explained. However, she also said that she thinks it’s too early to make any concrete proposals about what those changes might look like.

At the moment, the most important thing is to make it clear that AU considers using a chatbot in an exam to be cheating, she added.

“It’s a requirement that students do their exam assignments independently and individually, and you haven’t done that if you use a chatbot. It’s a question both of the students’ learning and of society being able to have confidence in a university diploma as a guarantee of quality,” she said.

Maigaard stressed that current methods of detecting cheating also play a role in determining if a student has used AI in an exam.

“Among other things, we use plagiarism checkers, supervised exams, combination written-oral exams, and then there’s the fact that lecturers often have knowledge about students’ level and language from other contexts,” she said.

AI researcher: It’s impressive, but it has weaknesses

Associate Professor Kristoffer Laigaard Nielbo is impressed by ChatGPT, because it’s the first of its kind that’s intelligent enough to carry on a conversation with a human being. This has been achieved by feeding it massive amounts of data combined with human fine-tuning. In other words, it’s been trained by people who have rated how well it responds and constantly corrected its answers to make it seem human. But Nielbo can also identify the chatbot’s limits, thanks to his in-depth knowledge of artificial intelligence.

“In lots of ways, it’s a really impressive chatbot. You can talk to it, and it gives reasonable answers. But that said, I’m perhaps not quite as impressed as a lot of other people, because we’re already familiar with models like this and have seen similar levels of performance before. There are classic areas where it breaks down, and it’s really easy to get it to give you a wrong answer.”

“Something like simple arithmetic is difficult for these LLMs. It also has a really hard time figuring out who a personal pronoun refers to in a sentence. For example, if I ask you if you’re coming to the party, and you reply that you have to work, ChatGPT will have a really hard time understanding the implied meaning. Whereas I of course understand immediately that you’re not coming because you have to work,” Nielbo explained.

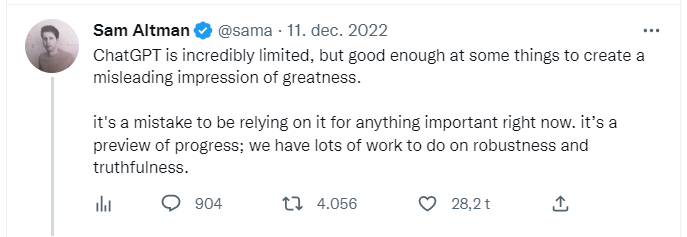

Co-founder: Don’t rely on it for anything important

Users should also be aware of the fact that the chatbot’s data only goes up to 2021. For example, it has no idea that Russia invaded Ukraine in 2022. There are other pitfalls as well. The chatbot will always try to give you a correct answer, so it sometimes gives you what it believes is a correct answer – based on an incorrect premise.

“If you ask it something nonsensical: Why is a special supercomputer better than a supercomputer? Then it will start explaining why the former isn’t a supercomputer and why the latter is. The entire premise of the discussion is wrong, but it assumes that it’s correct and draws conclusions based on it. So it’s probably wise to be careful if you start using it in a context where you don’t have control over whether the inputs you’re giving it are correct,” Nielbo said.

In December, Sam Altman, co-founder and CEO of OpenAI, the company behind ChatGPT, also stressed the limitations of the chatbot.

“It’s a mistake to be relying on it for anything important right now,” he has tweeted.

Researchers aren’t worried about AI

Nielbo isn’t as worried about the technology and the new ChatGPT as quite a few others in the media debate have been. First and foremost, he pointed out, the technology is here to stay, and the automation of tasks is something that’s been going on since industrialization. He sees automation as a tool that can free up human resources for other tasks.

“I’m not worried about it. It’s a good tool. You can always misuse technology, but that doesn’t make it bad. Most often it’s people who make technology bad,” Nielbo said.

Instead, he believes that the chatbot may even be a valuable tool for students.

“I would use it constructively as a kind of conversational partner, in the knowledge that it can make mistakes, so you have to reflect on what you get out of it. You can have it help you think through a problem: What could the solution be here?” he said.

Students who want to do well still have to sit down and think

He drew an analogy to the introduction of the pocket calculator into the public school system. To begin with, it was considered cheating for pupils to use it. Gradually, the perspective on pocket calculators shifted, and people began to view them as an aid – and adapted the curriculum to the new technology.

Nielbo himself doesn’t see any reasons not to let students use the chatbot for exams in his own field.

You wouldn’t be completely against the idea that it could be used in an exam as a tool, without that being considered cheating?

“No, because I can get my information other places online in any case. As soon as you’re allowed to use the internet, I don’t see any problem in also letting you use ChatGPT. At least in the more formal subjects. I’m not in a position to judge if your exam is to write an essay about something or other. But even in that case, you have to check what it generates really thoroughly. Students who want to do well will still have to sit down and think about how they can improve its response,” Nielbo said.

Universities Denmark is aware of the technology

Universities Denmark hasn’t decided on its position on the chatbot yet, explained Jesper Langergaard, the organisation’s director. The special interest organisation for the Danish universities is aware of the technology, however, and will probably discuss it at a meeting in February.

“Discussing it is necessary, because it presents new challenges and new opportunities for students,” he said.

He hesitated to draw any conclusions about whether the chatbot is problematic in the context of higher education. Universities Denmark isn’t negatively predisposed towards it, he explained.

“I know that what’s been in the media to begin with is that now it’s easier to cheat and get someone to write your papers, and that can be a challenge. But all new technology gives us possibilities. Back when calculators were first invented, people could say: ‘Isn’t that cheating?’ Just like now. But the calculator is a positive development,” he said.

The National Union of Students in Denmark: It’s probably more widespread in secondary schools

The National Union of Students in Denmark is also aware that a new – and for some people unexpected – tool now exists. But as chairperson Julie Lindmann told me, the organisation hasn’t drawn any conclusions about it either.

“At secondary school level, with explication papers where you have to explicate something, for example from a specific historical period, using it is probably more widespread. But at university level, assignments are rarely that simple. But if it becomes a tool students use to explore things, it’s probably important to think about including it in some methodology instruction about what kind of information you get out of it,” Lindmann said.

At a board meeting in January, she intends to bring up the issue and ask the student councils whether they’re aware of chatbot use at their universities.

“I definitely think it can be used more at the secondary schools. And so obviously, they’ll bring that with them when they go to university. We’ve always seen this. People also talked about Wikipedia being cheating. But then we discovered that it’s also a tool,” Lindmann said.